Measuring Success

Thomas Cummings - 24th May 2024

When evaluating project success, the key factors to consider were identified by Lockhart (2023) as cost, timeline, quality of deliverables and customer satisfaction. While the budget is not a significant concern, the other factors are relevant to this research project, especially given the strict, fixed deadline and the requirement to produce both a functioning project deliverable and a written report. Further information regarding the project aims and objectives for the deliverable can be found here. As Paturyan (2024) notes, “project evaluation should ideally be carried out at all stages of its implementation” so that feedback from evaluation can be used to amend the project management documents, such as the Gantt chart, and therefore bring the project back on track. Further information regarding the project management tools used in the project can be found here.

One method for ongoing project evaluation is schedule variance, which is “the difference between the work planned and completed at a given time. […] Monitoring this metric throughout the project allows teams to identify potential bottlenecks and make necessary adjustments to meet deadlines.” (Lockhart, 2023). However, given the linear waterfall nature of this research project, a schedule variance analysis covering the whole project is difficult to complete: tasks are generally worked sequentially, so any delay in the current task is likely to have a knock-on effect on the tasks that follow. Foursquare visualisation is less useful for the same reason, as it considers which of multiple tasks are ahead of schedule, on schedule or behind schedule. Elements of stoplight visualisation are, however, used in the Gantt chart, where overdue tasks are highlighted to show their importance.
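As a rough illustration of per-task schedule variance with a stoplight status, the sketch below measures variance in days against each task's planned end date. The task names, dates, the `Task` class and the colour rules are all hypothetical assumptions made for this example; they are not taken from the project's actual Gantt chart.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    name: str
    planned_end: date
    actual_end: Optional[date] = None  # None while the task is still open


def schedule_variance_days(task: Task, today: date) -> int:
    """Days ahead of (positive) or behind (negative) the planned end date."""
    if task.actual_end is not None:
        return (task.planned_end - task.actual_end).days
    # An open task only accrues negative variance once its planned end has passed.
    return min(0, (task.planned_end - today).days)


def stoplight(task: Task, today: date) -> str:
    """Assumed colour rules for highlighting a task on a Gantt chart."""
    if schedule_variance_days(task, today) < 0:
        return "red"    # finished late, or open and overdue
    if task.actual_end is None:
        return "amber"  # still open, not yet due
    return "green"      # finished on or before the planned date


# Hypothetical tasks and dates, for illustration only.
tasks = [
    Task("Literature review", date(2024, 3, 15), date(2024, 3, 13)),
    Task("Data preparation", date(2024, 4, 1), date(2024, 4, 5)),
    Task("Model training", date(2024, 5, 1)),
    Task("Write-up", date(2024, 6, 1)),
]
today = date(2024, 5, 10)

for task in tasks:
    print(task.name, schedule_variance_days(task, today), stoplight(task, today))
```

Because the tasks run sequentially, a red status on one task is a prompt to re-plan the tasks behind it rather than a judgement on that task alone.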
While metrics for evaluating the entire project are useful to some extent, there is a greater focus on deadline KPIs for the individual and principal activities that must be completed throughout the project to ensure the final deadline is met. This falls within the risk management strategy of the project, as failing to meet the deadline would be a severe risk, which must be mitigated as a priority through diligent planning and continued monitoring of progress as discussed above. In addition to the time-focused targets, there is also a requirement for ongoing evaluation of the individual machine learning models during hyperparameter optimisation. This is important to ensure that the technical performance of the models is optimised, and it requires evaluation of metrics including accuracy, precision, recall and F1 score for each model iteration. Continued model optimisation will aim to provide the most accurate model possible while also taking into account the processing requirements for training and model implementation. The models will be benchmarked against the average accuracy of 70% achieved for manual visual classification in the research by Gant et al. (2016).
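The metrics named above can be computed directly from the true and predicted labels of a model iteration. The following is a minimal sketch using only the standard library: it assumes integer class labels and macro averaging across classes, both of which are choices made for this example rather than details of the project. The label lists are invented, and only the 70% benchmark comes from Gant et al. (2016).

```python
from collections import Counter

BENCHMARK_ACCURACY = 0.70  # manual visual classification, Gant et al. (2016)


def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1 score."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = Counter(zip(y_true, y_pred))  # (true, predicted) -> count
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = pairs[(label, label)]
        fp = sum(n for (t, p), n in pairs.items() if p == label and t != label)
        fn = sum(n for (t, p), n in pairs.items() if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    correct = sum(pairs[(label, label)] for label in labels)
    return {
        "accuracy": correct / len(y_true),
        "precision": sum(precisions) / len(labels),
        "recall": sum(recalls) / len(labels),
        "f1": sum(f1s) / len(labels),
    }


# Invented labels for one hypothetical model iteration.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

metrics = classification_metrics(y_true, y_pred)
beats_benchmark = metrics["accuracy"] > BENCHMARK_ACCURACY
print(metrics, beats_benchmark)
```

Recording these four values for every iteration makes it easier to see whether a hyperparameter change improved the model overall or only shifted errors from one class to another.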

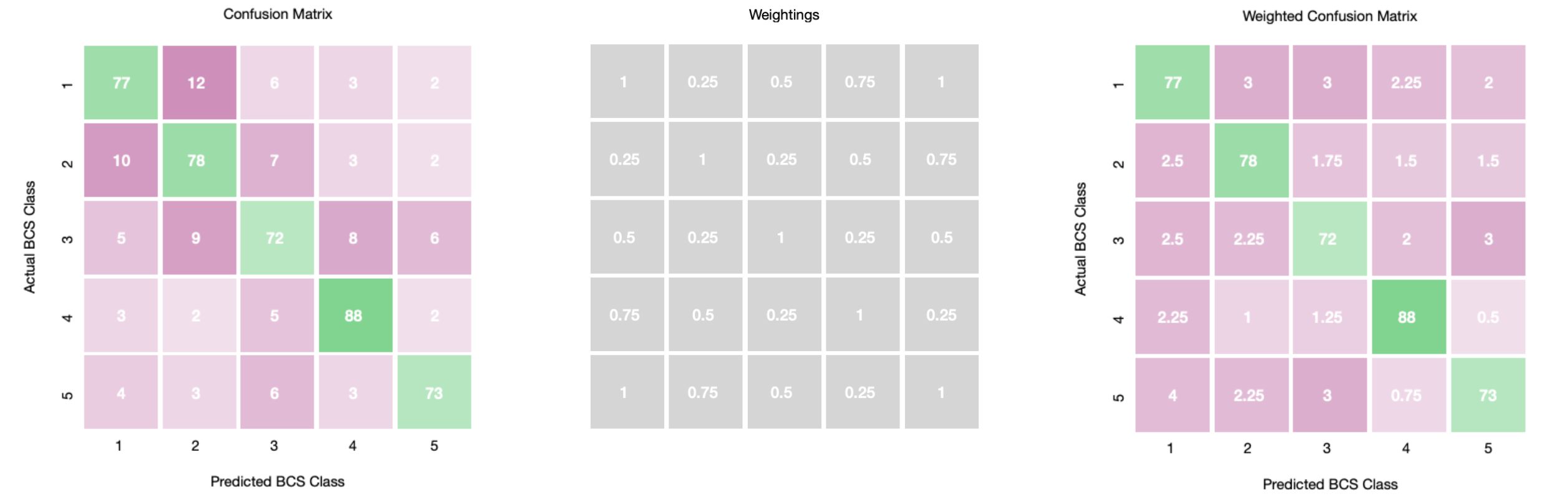

Figure 1 - example weighted confusion matrix

A confusion matrix can also be plotted to show the percentage of correct predictions and to visualise the distribution of incorrect predictions. A weighted version, shown in figure 1, can also be useful given that predictions further from the correct class are more concerning than those in the adjacent class bracket. While the weightings shown are useful when comparing only the incorrect predictions, if the evaluation metric also compares incorrect classifications with correct classifications, other weightings should be considered so that the overall evaluation and the level of incorrect classification are not affected.

On completion of the project, there are multiple elements in evaluating overall success. The easiest metric to determine is whether the project met the submission deadline: this is a fixed date without any scope for late submission, so missing it would be a failure of the research project and would not be acceptable. A more nuanced metric is the functionality of the project deliverable, which has a wider scope for slippage because the evolution of the project allows its boundaries and scope to change as required. Despite this, the success of the project will still require, as the minimum viable product, a functioning machine learning model to classify Labrador retriever images, along with an analysis of cross-breed generalisability. A final metric will be the mark achieved for the written research assessment; however, this will be determined by a third party based on the strength of the paper and will only be confirmed following submission. While the mark is outside the direct control of the project management, it will be a direct result of the effort put into completing the research project and could be considered a final stakeholder review in determining the overall quality of the research.
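Returning to the weighted confusion matrix discussed earlier, the sketch below shows one way the idea could be implemented. It assumes ordered integer classes and uses the distance between the true and predicted class as the weight, which is an assumption made for this example and not necessarily the weighting shown in figure 1. The labels are invented.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def mean_error_distance(matrix):
    """Average class distance |true - predicted| over incorrect predictions only."""
    n = len(matrix)
    errors = sum(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    if errors == 0:
        return 0.0
    weighted = sum(matrix[i][j] * abs(i - j) for i in range(n) for j in range(n))
    return weighted / errors


# Invented labels for three ordered classes (0, 1, 2).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

matrix = confusion_matrix(y_true, y_pred, n_classes=3)
print(matrix)
print(mean_error_distance(matrix))
```

Dividing by the number of incorrect predictions keeps this score independent of accuracy, so it compares only how far off the errors are. A metric that also has to reflect correct classifications would need a different normalisation.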

References

Gant, P., Holden, S.L., Biourge, V., & German, A.J. (2016). ‘Can you estimate body composition in dogs from photographs?’, BMC Veterinary Research, 12(1). https://doi.org/10.1186/s12917-016-0642-7

Lockhart, L. (2023). ‘How to evaluate and measure the success of a project’, Float Resources. Available from https://www.float.com/resources/project-evaluation/

Paturyan, A. (2024). ‘Stages of project evaluation: basic principles and useful tools’. Available from https://www.linkedin.com/pulse/stages-project-evaluation-basic-principles-useful-tools-paturyan-49jbf/
